一套ORACLE 11c R2 Windows环境使用import 导入一张包含blob列时速度非常的慢(平均每秒10条),大家都知道imp里因为不能使用parallel等其它原因导入是慢一些,主要等待事件和时间是control file parallel write和enq: CF – contention,这么慢不能忍,下面是分析一下原因。

1, 查看导入的wait event,使用了Tanel Poder的snapper

SQL> select event,username,p1,p2,last_call_et,sid,logon_time,program from v$session where username is not null and status='ACTIVE';

EVENT USERNAME P1 P2 LAST_CALL_ET SID LOGON_TIME PROGRAM

---------------------------------- ------------------------------ ---------- ---------- ------------ ---------- -------------- --------------------

control file parallel write HUIFU 2 15 0 1833 27-11月-20 imp.exe

enq: CF - contention HUIFU 1128660997 0 0 2311 28-11月-20 imp.exe

SQL*Net message to client SYS 1111838976 1 0 2671 28-11月-20 sqlplus.exe

SQL> @snapper all 5 1 1833

Sampling SID 1833 with interval 5 seconds, taking 1 snapshots...

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

SID, USERNAME , TYPE, STATISTIC , DELTA, HDELTA/SEC, %TIME, GRAPH , NUM_WAITS, WAITS/SEC, AVERAGES

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

1833, HUIFU , STAT, Requests to/from client , 84, 16.24, , , , , 1 per e

1833, HUIFU , STAT, user calls , 84, 16.24, , , , , 1 per e

1833, HUIFU , STAT, session logical reads , 1191, 230.28, , , , , 14.18 per e

1833, HUIFU , STAT, CPU used when call started , 19, 3.67, , , , , .23 per e

1833, HUIFU , STAT, CPU used by this session , 19, 3.67, , , , , .23 per e

1833, HUIFU , STAT, DB time , 515, 99.57, , , , , 6.13 per e

1833, HUIFU , STAT, user I/O wait time , 127, 24.56, , , , , 1.51 per e

1833, HUIFU , STAT, non-idle wait time , 500, 96.67, , , , , 5.95 per e

1833, HUIFU , STAT, non-idle wait count , 1121, 216.74, , , , , 13.35 per e

1833, HUIFU , STAT, session pga memory , -131072, -25.34k, , , , , -1.56k per e

1833, HUIFU , STAT, enqueue waits , 58, 11.21, , , , , .69 per e

1833, HUIFU , STAT, enqueue requests , 463, 89.52, , , , , 5.51 per e

1833, HUIFU , STAT, enqueue releases , 465, 89.91, , , , , 5.54 per e

1833, HUIFU , STAT, physical read total IO requests , 595, 115.04, , , , , 7.08 per e

1833, HUIFU , STAT, physical read total bytes , 8929280, 1.73M, , , , , 106.3k per e

1833, HUIFU , STAT, physical write total IO requests , 449, 86.81, , , , , 5.35 per e

1833, HUIFU , STAT, physical write total bytes , 6021120, 1.16M, , , , , 71.68k per e

1833, HUIFU , STAT, cell physical IO interconnect bytes , 14950400, 2.89M, , , , , 177.98k per e

1833, HUIFU , STAT, total cf enq hold time , 1845, 356.73, , , , , 21.96 per e

1833, HUIFU , STAT, total number of cf enq holders , 100, 19.33, , , , , 1.19 per e

1833, HUIFU , STAT, db block gets , 923, 178.46, , , , , 10.99 per e

1833, HUIFU , STAT, db block gets from cache , 758, 146.56, , , , , 9.02 per e

1833, HUIFU , STAT, db block gets from cache (fastpath) , 482, 93.19, , , , , 5.74 per e

1833, HUIFU , STAT, db block gets direct , 165, 31.9, , , , , 1.96 per e

1833, HUIFU , STAT, consistent gets , 268, 51.82, , , , , 3.19 per e

1833, HUIFU , STAT, consistent gets from cache , 135, 26.1, , , , , 1.61 per e

1833, HUIFU , STAT, consistent gets from cache (fastpath) , 115, 22.24, , , , , 1.37 per e

1833, HUIFU , STAT, consistent gets - examination , 19, 3.67, , , , , .23 per e

1833, HUIFU , STAT, consistent gets direct , 133, 25.72, , , , , 1.58 per e

1833, HUIFU , STAT, logical read bytes from cache , 7315456, 1.41M, , , , , 87.09k per e

1833, HUIFU , STAT, physical reads , 100, 19.33, , , , , 1.19 per e

1833, HUIFU , STAT, physical reads direct , 100, 19.33, , , , , 1.19 per e

1833, HUIFU , STAT, physical read IO requests , 100, 19.33, , , , , 1.19 per e

1833, HUIFU , STAT, physical read bytes , 819200, 158.39k, , , , , 9.75k per e

1833, HUIFU , STAT, db block changes , 460, 88.94, , , , , 5.48 per e

1833, HUIFU , STAT, physical writes , 165, 31.9, , , , , 1.96 per e

1833, HUIFU , STAT, physical writes direct , 165, 31.9, , , , , 1.96 per e

1833, HUIFU , STAT, physical write IO requests , 165, 31.9, , , , , 1.96 per e

1833, HUIFU , STAT, physical write bytes

' , 1351680, 261.35k, , , , , 16.09k per e

1833, HUIFU , STAT, physical writes non checkpoint , 165, 31.9, , , , , 1.96 per e

1833, HUIFU , STAT, free buffer requested , 1, .19, , , , , .01 per e

1833, HUIFU , STAT, physical reads direct (lob) , 50, 9.67, , , , , .6 per e

1833, HUIFU , STAT, physical writes direct (lob) , 165, 31.9, , , , , 1.96 per e

1833, HUIFU , STAT, shared hash latch upgrades - no wait , 1, .19, , , , , .01 per e

1833, HUIFU , STAT, calls to kcmgcs , 169, 32.68, , , , , 2.01 per e

1833, HUIFU , STAT, calls to kcmgas , 51, 9.86, , , , , .61 per e

1833, HUIFU , STAT, calls to get snapshot scn: kcmgss , 318, 61.48, , , , , 3.79 per e

1833, HUIFU , STAT, redo entries , 421, 81.4, , , , , 5.01 per e

1833, HUIFU , STAT, redo size , 169380, 32.75k, , , , , ~ bytes

1833, HUIFU , STAT, redo size for direct writes , 8580, 1.66k, , , , , 102.14 per e

1833, HUIFU , STAT, redo ordering marks , 1, .19, , , , , .01 per e

1833, HUIFU , STAT, redo subscn max counts , 1, .19, , , , , .01 per e

1833, HUIFU , STAT, file io wait time , 188187, 36.39k, , , , , 2.24k per e

1833, HUIFU , STAT, Effective IO time , 39152, 7.57k, , , , , 466.1 per e

1833, HUIFU , STAT, Number of read IOs issued , 50, 9.67, , , , , .6 per e

1833, HUIFU , STAT, undo change vector size , 13388, 2.59k, , , , , 159.38 per e

1833, HUIFU , STAT, no work - consistent read gets , 1, .19, , , , , .01 per e

1833, HUIFU , STAT, active txn count during cleanout , 18, 3.48, , , , , .21 per e

1833, HUIFU , STAT, cleanout - number of ktugct calls , 18, 3.48, , , , , .21 per e

1833, HUIFU , STAT, index crx upgrade (positioned) , 1, .19, , , , , .01 per e

1833, HUIFU , STAT, lob writes , 84, 16.24, , , , , 1 per e

1833, HUIFU , STAT, index scans kdiixs1 , 1, .19, , , , , .01 per e

1833, HUIFU , STAT, HSC Heap Segment Block Changes , 84, 16.24, , , , , 1 per e

1833, HUIFU , STAT, execute count , 84, 16.24, , , , , ~ execu

1833, HUIFU , STAT, bytes sent via SQL*Net to client , 12768, 2.47k, , , , , 152 bytes

1833, HUIFU , STAT, bytes received via SQL*Net from client , 904906, 174.96k, , , , , 10.77k per e

1833, HUIFU , STAT, SQL*Net roundtrips to/from client , 84, 16.24, , , , , 1 per e

1833, HUIFU , TIME, DB CPU , 187500, 36.25ms, 3.6%, [@ ], , ,

1833, HUIFU , TIME, sql execute elapsed time , 5221045, 1.01s, 100.9%, [##########], , ,

1833, HUIFU , TIME, DB time , 5163529, 998.36ms, 99.8%, [##########], , , 24.87ms unacc

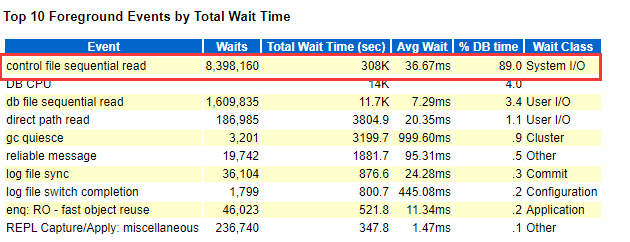

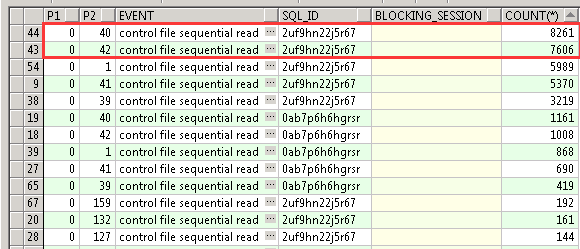

1833, HUIFU , WAIT, control file sequential read , 777869, 150.4ms, 15.0%, [WW ], 515, 99.57, 1.51ms avera

1833, HUIFU , WAIT, control file parallel write , 1593723, 308.14ms, 30.8%, [WWWW ], 144, 27.84, 11.07ms avera

1833, HUIFU , WAIT, db file sequential read , 190925, 36.92ms, 3.7%, [W ], 51, 9.86, 3.74ms avera

1833, HUIFU , WAIT, direct path read , 39362, 7.61ms, .8%, [W ], 51, 9.86, 771.8us avera

1833, HUIFU , WAIT, direct path write , 1064754, 205.87ms, 20.6%, [WWW ], 122, 23.59, 8.73ms avera

1833, HUIFU , WAIT, SQL*Net message to client , 488, 94.35us, .0%, [ ], 85, 16.43, 5.74us avera

1833, HUIFU , WAIT, SQL*Net message from client , 16401, 3.17ms, .3%, [ ], 85, 16.43, 192.95us avera

1833, HUIFU , WAIT, SQL*Net more data from client , 688, 133.02us, .0%, [ ], 33, 6.38, 20.85us avera

1833, HUIFU , WAIT, events in waitclass Other , 1337581, 258.62ms, 25.9%, [WWW ], 59, 11.41, 22.67ms avera

-- End of Stats snap 1, end=2020-11-28 12:53:05, seconds=5.2

----------------------------------------------------------------------------------------------------

Active% | INST | SQL_ID | SQL_CHILD | EVENT | WAIT_CLASS

----------------------------------------------------------------------------------------------------

33% | 1 | 7wf7739g9w3gz | 2 | control file parallel write | System I/O

27% | 1 | 7wf7739g9w3gz | 2 | enq: CF - contention | Other

20% | 1 | 7wf7739g9w3gz | 2 | direct path write | User I/O

13% | 1 | 7wf7739g9w3gz | 2 | control file sequential read | System I/O

2% | 1 | | | ON CPU | ON CPU

2% | 1 | 7wf7739g9w3gz | 2 | ON CPU | ON CPU

2% | 1 | 7wf7739g9w3gz | 2 | db file sequential read | User I/O

-- End of ASH snap 1, end=2020-11-28 12:53:05, seconds=5, samples_taken=45

SQL> select sql_text from v$sqlarea where sql_id='7wf7739g9w3gz';

SQL_TEXT

--------------------------------------------------------------------------- - ------------------------------

INSERT /*+NESTED_TABLE_SET_REFS+*/ INTO "TAB_ANBOB" ("CONTENT", "FILE_UNIQUE_ID") VALUES (:1, :2)

SQL> desc huifu.TAB_ANBOB

名称 类型

---------------- ----------------------------------------------------------

CONTENT BLOB

FILE_UNIQUE_ID VARCHAR2(48)

SQL> SELECT OWNER,LOGGING FROM DBA_TABLES WHERE TABLE_NAME='TAB_ANBOB';

OWNER LOG

------------------------------ ---

HUIFU NO

Note:

表为了减少redo产生配置了nologging. 主要等待事件和时间是control file parallel write和enq: CF – contention

imp 语句

imp huifu/huifu file=E:\xxx.dmp buffer=102400000 feedback=10000 commit=y full=y data_only=yes

Note:

这里是想每10k commit一次,但是包含lob列的表,如果指定了commit=y也是一行一commit.

“For tables containing LONG, LOB, BFILE, REF, ROWID, UROWID columns, array inserts are not done. If COMMIT=y, Import commits these tables after each row.”

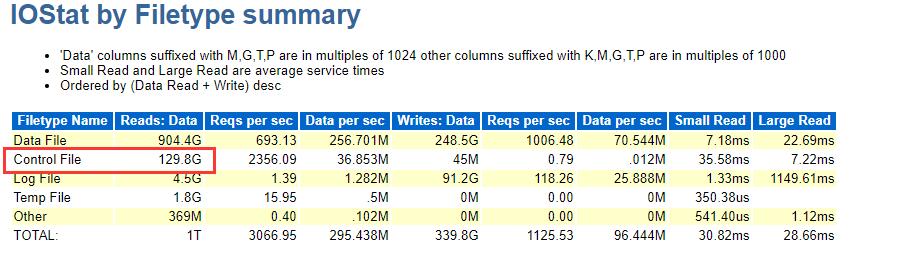

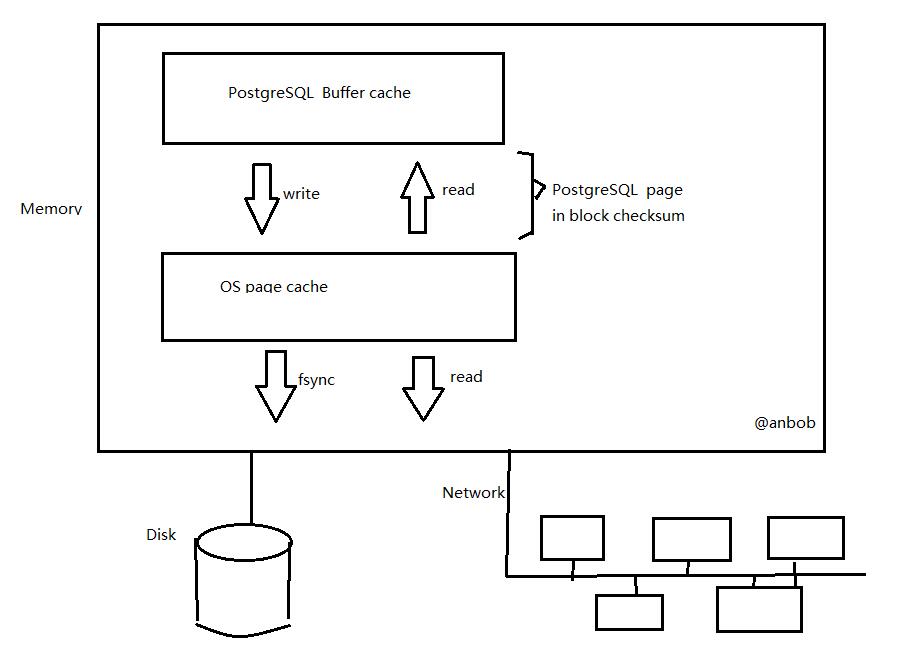

我之前的笔记中Troubleshooting performance event ‘enq: CF – contention’ 记录过most likely that the contention for the CF enqueue is caused by DML on a NOLOGGING object. When the modification work is performed on the data file under the nologging option, the control file needs to be updated in order to modify the unrecoverable SCN.

当修改nologging的对象时,控制文件需要更新unrecoverable SCN, 同时imp lob是每行commit,导致controlfile频繁的更新产生了控制文件相关的等待。 解决方法是启用logging或配置 event 10359 to level 1 跳过更新 unrecoverable SCN’s in the control file. 从11.2.0.2 引入了参数DB_UNRECOVERABLE_SCN_TRACKING

DB_UNRECOVERABLE_SCN_TRACKING enables or disables the tracking of unrecoverable (NOLOGGING) direct-path insert and load operations.

Ixora Said

If your application performs frequent NOLOGGING operations, particularly frequent small changes to NOLOGGING LOBs, then you may find that it also spends a lot of time waiting for control file sequential read and control file parallel write waits. In some real world applications, these waits have been seen to account for as much as 20% of application response time.

Why does Oracle do so much I/O to the controlfile? It is because whenever a datafile is changed by a NOLOGGING operation, the unrecoverable SCN for that datafile, which is stored in the controlfile, needs to be updated. These updates must occur in controlfile transactions to permit recoverability from an instance crash while the controlfile is being changed.

Unfortunately, controlfile transactions are very I/O intensive. Each controlfile transaction requires at least two reads and two data synchronous writes per controlfile copy. If the controlfiles are buffered by the operating system’s file system buffer cache, then the reads may be relatively cheap. Even so, in a typical environment with two or three controlfile copies, a controlfile transaction must nevertheless wait for 4 or 6 random physical writes to the controlfiles.

If your application makes frequent small changes to NOLOGGING LOBs, then it may well be that the controlfile transactions required to update the unrecoverable SCN are actually taking a lot longer than it would take to log the redo for the LOB changes if the LOBs were changed to LOGGING. However, there is a better alternative — namely, setting event 10359. Most numeric events are undocumented, and not normally supported. However, this particular event is sanctioned with a reference in the Oracle9i Application Developer’ Guide – Large Objects (LOBs).

Event 10359 disables all updates of unrecoverable SCNs. By setting this event you can retain the performance benefit of not logging LOB changes without sustaining the performance penalty of repeated foreground controlfile transactions. The only disadvantage is that RMAN will no longer be able to report which datafiles have recently been affected by NOLOGGING operations, and so you will have to adopt a backup strategy that compensates for that.

启用LOGGING的方法, 创建另一用户huifu2下使用logging table, 测试.

SQL> select owner,logGing from dba_tables where table_name='TAB_ANBOB';

OWNER LOG

------------------------------ ---

HUIFU2 YES

HUIFU NO

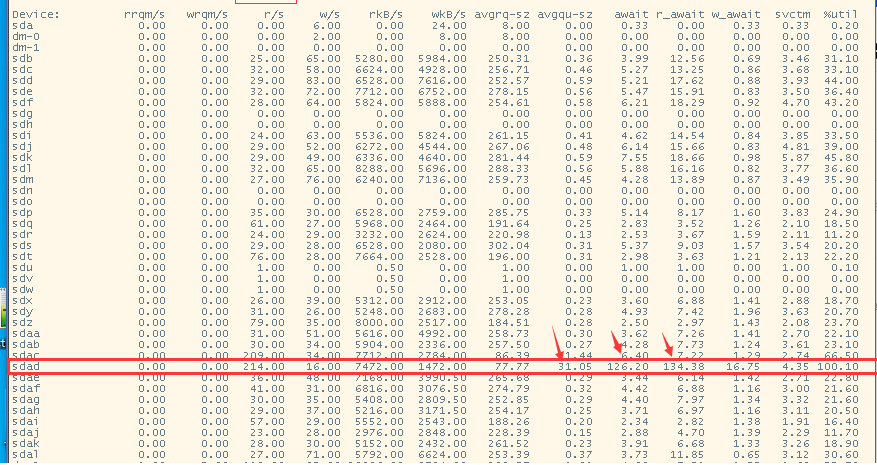

SQL> select event,username,p1,p2,last_call_et,sid,logon_time,program from v$session where username is not null and status='ACTIVE';

EVENT USERNAME P1 P2 LAST_CALL_ET SID

---------------------------------------- ------------------------------ ---------- ---------- ------------ ----------

control file parallel write HUIFU 2 15 0 1833

direct path write HUIFU2 14 1602160 0 2025

enq: CF - contention HUIFU 1128660997 0 0 2311

SQL*Net message to client SYS 1111838976 1 0 2671

SQL> @snapper ash 5 1 2025

----------------------------------------------------------------------------------------------------

Active% | INST | SQL_ID | SQL_CHILD | EVENT | WAIT_CLASS

----------------------------------------------------------------------------------------------------

55% | 1 | 7wf7739g9w3gz | 3 | direct path write | User I/O

24% | 1 | | | log file sync | Commit

8% | 1 | 7wf7739g9w3gz | 3 | direct path read | User I/O

5% | 1 | 7wf7739g9w3gz | 3 | db file sequential read | User I/O

3% | 1 | 7wf7739g9w3gz | 3 | ON CPU | ON CPU

-- End of ASH snap 1, end=2020-11-28 13:28:03, seconds=5, samples_taken=38

速度恢复了正常,wait event转到了lob 数据对象上。